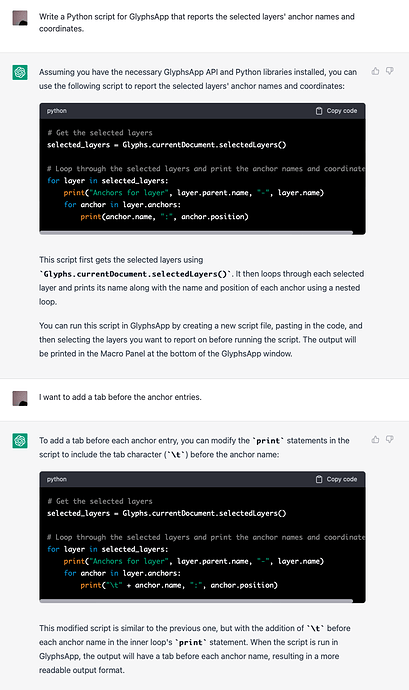

I just wanted to share my experiment with ChatGPT, which can apparently write pretty good Python code for Glyphs if you explain well. Attached image is a quite simple query example, but I have managed to make it generate more complex stuff including viewer plugins (with some rounds of feedback). It’s terrific and terrifying.

nice! I have been using chatgpt for glyphs, drawbot, random fonttools scripts… in typescript/react and go too. I wouldn’t say I am terrified but I am surprised by how good it is. not always, but a lot of the time. what I have found is that sometimes, when the problem gets too obscure, it is very confidently wrong about things, and will start making random suggestions like installing a library version that doesn’t exist.

it’s great at refactoring python too, if you ask it to make something more pythonic, or ask it to make an algorithm more efficient

If you’re happy using ChatGPT, I very much recommend GitHub Copilot, if you haven’t tried that already. It integrates perfectly with JetBrains IDEs such as PyCharm. There’s even a third-party plugin that lets it integrate with Xcode. It is built on the same model family (GPT-3), just with different training data.

I had less luck with ChatGPT. It took my correction suggestions in plain English, but it would not produce runnable code, not even after 10 iterations.

I saw it make up PyObjC method names though, rather than producing actual code.

So it’s not going to replace mekkablue (the OG ChatGPT!) just yet ;-)

After seeing this post I tried it for a simple script I wanted to write to assist with, coincidentally, Toshi’s BubbleKern.

I prompted it with this: Write a Python script for GlyphsApp that adds 10 units of LSB to the selected glyph in the selected layer, and if there is a layer in the same master named “bubble” then add the same units of LSB.

It quickly generated code that was 99% accurate. It only messed up one line, using .leftMetrics in selected_layer.leftMetrics += lsb_offset instead of .LSB.
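For reference, here is a minimal sketch of what a corrected version of that script might look like. It is not the code ChatGPT produced. It assumes the Glyphs Python API attributes `GSLayer.LSB`, `GSLayer.name`, `GSLayer.associatedMasterId`, `GSLayer.parent`, `GSGlyph.layers` and `Glyphs.font.selectedLayers`; the helper name `add_lsb` and its parameters are made up for illustration.

```python
def add_lsb(layer, offset=10, extra_layer_name="bubble"):
    """Add `offset` units to the LSB of `layer` and of any other layer of the
    same glyph that is named `extra_layer_name` and belongs to the same master.

    `add_lsb` is a hypothetical helper, not part of the Glyphs API.
    Returns the list of layers that were changed.
    """
    layer.LSB += offset  # .LSB, not .leftMetrics
    changed = [layer]
    glyph = layer.parent
    for other in glyph.layers:
        if other is layer:
            continue
        same_master = other.associatedMasterId == layer.associatedMasterId
        if other.name == extra_layer_name and same_master:
            other.LSB += offset
            changed.append(other)
    return changed


# GlyphsApp is only importable inside Glyphs (e.g. the Macro panel),
# so the import is guarded to keep the helper usable elsewhere.
try:
    from GlyphsApp import Glyphs
except ImportError:
    Glyphs = None

if Glyphs is not None and Glyphs.font is not None:
    for selected_layer in Glyphs.font.selectedLayers:
        add_lsb(selected_layer)
```

Keeping the logic in a small function makes it easier to spot a wrong attribute name like the one above, since there is only one place where the sidebearing is touched.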

I can see this being incredibly helpful though!

Is it actually trained with the Glyphs API and all the open-source glyph scripts/plugins (I know very little of how it all works) or can it be?

I would be curious how other people word/structure prompts to get more accurate results.

I’ve had less luck with Copilot. For me it is more of a powerful autocomplete, whereas ChatGPT is more general purpose and I can actually work through things with it. Maybe I haven’t read enough of the Copilot docs to know how and where it is most powerful.

I am definitely more impressed by both Copilot and ChatGPT when doing web development, where the training corpus is enormous.

Seems like it can scan APIs and even work with articles, but sometimes you need to tell it to double-triple-… check stuff. Interestingly, it told me it can’t read forums, but it seems to have no problem with articles.

After using it for a few days, I am finding it helpful for creating short snippets that loop through masters or add/move/remove certain things, but it seems to struggle with other seemingly simple tasks.

For example, I asked: “For GlyphsApp version 3 make a basic vanilla preview window that displays the currently selected glyph.” It just can’t figure it out… It adds modules/methods that don’t exist, even after I feed it back the error messages, working snippets, and a link to the Glyphs API.
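One cheap way to catch invented names before running a whole generated script is to ask Python whether the attribute resolves at all. This is only a sketch: `missing_attributes` is a hypothetical helper, and a name that resolves is not proof that it does what the generated code expects.

```python
def missing_attributes(obj, names):
    """Return the names from `names` that `obj` does not expose.

    Hypothetical helper for sanity-checking generated code; it only tests
    whether each name resolves, not whether it behaves as expected.
    """
    return [name for name in names if not hasattr(obj, name)]
```

In the Macro panel you could call it on a real object, for example `missing_attributes(Glyphs.font.selectedLayers[0], ["LSB", "leftMetrics"])`, and look up anything it reports in the Glyphs API docs before going another round with ChatGPT.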

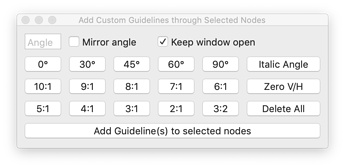

Got to say that it is a really fascinating experience using this. We (yes, we) managed to make this fairly involved (at least for an amateur coder like myself) script that can add guidelines to nodes at custom or preset angles and ratios.

I had the basic idea of what it should do and started asking it to build things up slowly. Things like: make a window then add a button then trigger that button to add a guideline. A working prototype came together quickly but then it was a lot of back and forth getting to add all the other functionality, exceptions, checks, etc.

As wholly impressive as it is, there are times when it can become frustrating, because apparently there is some sort of character limit, so at a certain point it will just stop generating midway if there is too much. Then, when you ask it to remove something, make it simpler, or only do a certain part, it gets confused or changes things in a way that introduces an error. What is interesting, though, is that sometimes when you feed back the error message it can fix it (or not).

It was quite a long process, but certainly not as long as it would have been if I had to dedicate the time to figure it out by finding similar scripts, posting on the forum, Stack Overflow, YouTube, etc. It probably wouldn’t even have had the same result, because I find so many ideas come in the moment while testing iterations, and it becomes quite easy to ask it “can we also try to add a button that does this…” and have a new prototype in a short amount of time.

All this to say that I hope it encourages more people who have ideas to make more tools and not let Python skill level slow them down.

If anyone would like to try out the script, it is available in the newly minted GitHub repo gorjious-glyph-scripts.

I noticed the length limit too. I think it might be better to make a separate inquiry thread entirely and ask for smaller chunks, assuming you know how to connect them.

There are Chrome extensions that allow the user to create complex prompts in ChatGPT (AIPRM, for example).

It works pretty well!

Had to do that a few times. Just have to be wary of it changing the names of variables, methods, and such.
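If you stitch chunks together, a rough way to spot renamed variables or functions is to compare the names each version defines. The sketch below uses only Python’s standard `ast` module; `defined_names` and `renamed_candidates` are hypothetical helper names, and the result is only a hint about what to check by hand.

```python
import ast


def defined_names(source):
    """Collect names that `source` defines: functions, classes, assigned variables."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            names.add(node.name)
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            names.add(node.id)
    return names


def renamed_candidates(old_source, new_source):
    """Return (names only in the old chunk, names only in the new chunk)."""
    old, new = defined_names(old_source), defined_names(new_source)
    return sorted(old - new), sorted(new - old)


old_chunk = "lsb_offset = 10\ndef add_guide(node):\n    return node\n"
new_chunk = "offset = 10\ndef add_guide(node):\n    return node\n"
dropped, added = renamed_candidates(old_chunk, new_chunk)
print(dropped, added)  # ['lsb_offset'] ['offset']
```

Names that show up on only one side are worth checking before pasting the new chunk into the rest of the script.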

Oh cool, will check it out.

Here’s another type of code generator that may be of interest. Uses education-standard pseudocode as input. I have not tried it but intend to.