Yes, a Git repository on GitHub can be the most suitable option.

Hey Jeremy (@tribby),

Thanks for sharing Petr van Blokland’s talk, “Combining ML & rule-based digital assistants to create short design cycles for type design projects.” It’s exactly the direction I’m exploring.

Are you in touch with Petr? I saw his project is semi-open-source—do you have any more details?

I’ll create a new repository on GitHub. In its early stages, it might be a good idea to keep it ‘semi-open’ temporarily.

Who would like to be invited? @GeorgSeifert

I’ll add a TODO.md file this week to summarize what I’ve collected so far.

I’m very interested in the project.

Do you have a GitHub account?

I’ve added a README.md file to the project on GitHub.

Here’s the link: https://github.com/thierryc/Glyphs-mcp (Private repo for now)

Please send me your GitHub account page, and I’ll add you to the project.

@GeorgSeifert @tribby, you should have received an invitation from GitHub.

Title: Minimal MCP-Server Plugin (FastMCP) – Start/Stop from Edit Menu

Hi everyone,

I’ve put together a very small Glyphs plugin that lets you spin up (or shut down) a local FastMCP server straight from the Edit menu:

What it does

- Boots a FastMCP SSE endpoint (http://127.0.0.1:3845/sse) so external agents (Claude, ChatGPT, etc.) can talk to Glyphs via the MCP protocol.

- Keeps all server code inside the plugin bundle—no global installs or extra CLI steps.

- Cleanly tears everything down when you choose Stop or quit Glyphs.

Tech notes

- Written in Python 3.11 (works with any 3.10+ interpreter you point Glyphs at).

- Uses fastmcp >= 2.0 under the hood.
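The start/stop behaviour described above can be sketched with the standard library alone. This is only an illustration, not the plugin's code: `ServerToggle` and `PingHandler` are hypothetical names, and a plain `http.server` stands in for FastMCP, which is not shown here. It assumes the server runs on a daemon thread and is torn down when Stop is chosen.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PingHandler(BaseHTTPRequestHandler):
    """Stand-in handler; a real plugin would hand requests to FastMCP."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


class ServerToggle:
    """Starts and stops a local HTTP server on a background daemon thread."""

    def __init__(self, host="127.0.0.1", port=0):
        # Port 0 lets the OS pick a free port; the plugin uses a fixed one.
        self._address = (host, port)
        self._server = None
        self._thread = None

    @property
    def running(self):
        return self._server is not None

    @property
    def port(self):
        return self._server.server_address[1] if self._server else None

    def start(self):
        if self.running:
            return  # already started, nothing to do
        self._server = ThreadingHTTPServer(self._address, PingHandler)
        self._thread = threading.Thread(
            target=self._server.serve_forever, daemon=True
        )
        self._thread.start()

    def stop(self):
        if not self.running:
            return
        self._server.shutdown()      # ask serve_forever() to return
        self._server.server_close()  # release the socket
        self._thread.join(timeout=5)
        self._server = None
        self._thread = None
```

A menu item would call `start()` or `stop()` depending on `running`; calling `stop()` from the application's quit hook covers the "quit Glyphs" case.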

If anyone wants to:

- review the structure,

- test on Intel vs. Apple-silicon setups,

I’ll push it to GitHub.

Thanks!

— Thierry

The server still advertises zero tools, so agents have nothing to call.

Let’s choose a starter set (compact “actions” like getSelection or applyKerning, a few status reads, maybe batch ops) and drop them into the manifest.

Starter tool list:

| Tool | Purpose |
|---|---|
| getFonts() | List all open fonts |
| getFont(fontId) | JSON: metadata, masters, instances… |
| getSelection() | IDs/names of selected glyphs |
| applyKerning(pairs) | Batch-apply kerning values |
| saveFont(fontId) | Force-save a font |
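As a rough sketch of how a few of these tools could sit behind a single dispatch function: everything below is illustrative. The `FONTS` dictionary is an invented stand-in for the state Glyphs would provide, `CURRENT_FONT_ID` and `call_tool` are hypothetical helpers, and the JSON return shapes are assumptions.

```python
import json

# Stand-in for application state; a real plugin would read this from Glyphs.
FONTS = {
    "font-1": {
        "familyName": "Demo Sans",
        "kerning": {},
        "selection": ["A", "V"],
    }
}
CURRENT_FONT_ID = "font-1"  # hypothetical notion of "the frontmost font"


def get_fonts():
    """List all open fonts."""
    return json.dumps(
        [{"fontId": fid, "familyName": f["familyName"]} for fid, f in FONTS.items()]
    )


def get_selection():
    """Names of the selected glyphs in the current font."""
    return json.dumps(FONTS[CURRENT_FONT_ID]["selection"])


def apply_kerning(pairs):
    """Batch-apply kerning values given as [left, right, value] triples."""
    kerning = FONTS[CURRENT_FONT_ID]["kerning"]
    for left, right, value in pairs:
        kerning.setdefault(left, {})[right] = value
    return json.dumps({"success": True, "applied": len(pairs)})


# Tool names as they appear in the starter list.
TOOLS = {
    "getFonts": get_fonts,
    "getSelection": get_selection,
    "applyKerning": apply_kerning,
}


def call_tool(name, **arguments):
    """Look up a tool by name and call it with keyword arguments."""
    tool = TOOLS.get(name)
    if tool is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    return tool(**arguments)
```

For example, `call_tool("applyKerning", pairs=[["A", "V", -80]])` records one pair in the stand-in data and reports how many pairs were applied.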

Feel free to toss more ideas into Jeremy’s “demo” issue on GitHub (semi-open) so we can hash them out there.

https://github.com/thierryc/Glyphs-mcp/issues/1

The GitHub repo is semi-open. Who would like access?

Heads-up: an easy trap is crafting tools that hard-code one workflow. Aim for flexible, low-level primitives instead—agents will mix and match them in ways we can’t predict.

Hey there—great to hear you’re interested!

Just send me your GitHub handle (the link to your GitHub page) and I’ll add you as a collaborator.

Let me know if you run into any issues or have questions. Happy hacking!

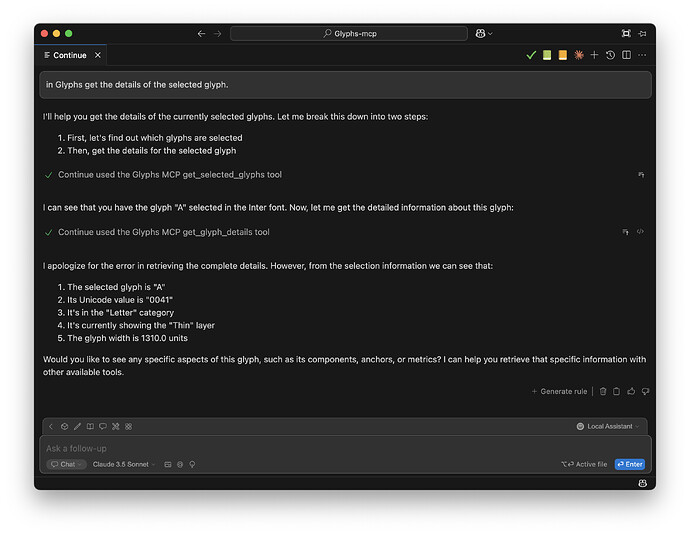

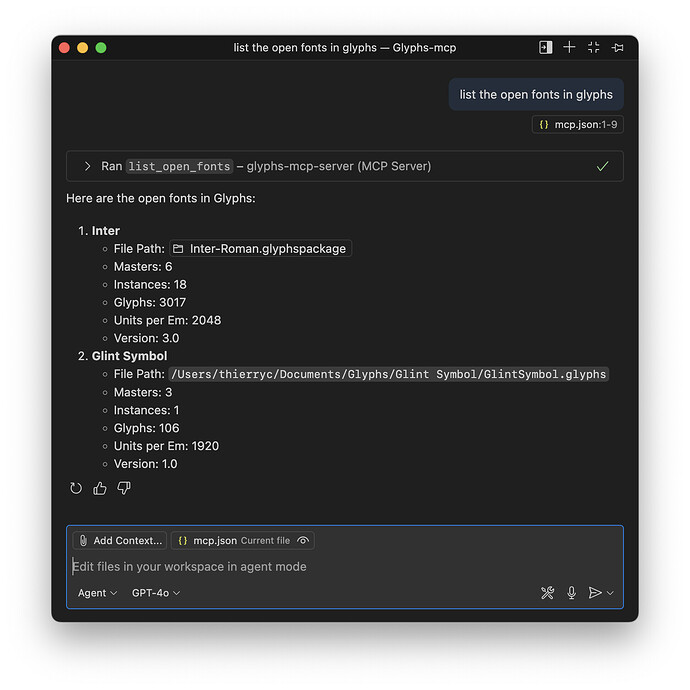

Working with the Continue plugin in Visual Studio Code.

Here is the list of tools available in the proof of concept:

Glyphs MCP Server started successfully!

• Port: 9680

• SSE endpoint: http://127.0.0.1:9680/sse

• Available tools: 6 tools

• Tools available:

- list_open_fonts

- get_font_glyphs

- get_font_masters

- get_font_instances

- get_glyph_details

- get_font_kerning

• Server running in background (daemon thread)

This is in the branch https://github.com/thierryc/Glyphs-mcp/tree/feat/add-initial-mcp-tool

The branch is under heavy development. For the moment, only listing the open fonts has been tested.

A very minimal MCP server is now starting to work!

I’ve tested it successfully using Continue and Claude Sonnet via the Anthropic API.

It should also be compatible with other clients that support MCP, such as the Claude app, Cursor, Windsurf, or the OpenAI ChatGPT API.
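For anyone curious what these clients send over the wire: MCP messages are JSON-RPC 2.0, with `tools/list` to discover tools and `tools/call` to invoke one. The helper names below are hypothetical, and this sketch only builds the messages rather than sending them.

```python
import json
from itertools import count

_request_ids = count(1)  # simple incrementing JSON-RPC ids


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request():
    """Ask the server which tools it advertises."""
    return jsonrpc_request("tools/list")


def call_tool_request(name, arguments=None):
    """Ask the server to run one tool with the given arguments."""
    return jsonrpc_request(
        "tools/call", {"name": name, "arguments": arguments or {}}
    )


print(json.dumps(call_tool_request("list_open_fonts"), indent=2))
```

A client such as Continue does this behind the scenes, so the sketch is mainly useful for debugging the server by hand.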

It’s still early, but we’re starting to see real progress!

Tools available:

- list_open_fonts

- get_font_glyphs

- get_font_masters

- get_font_instances

- get_glyph_details

- get_font_kerning

- create_glyph

- delete_glyph

- update_glyph_properties

- copy_glyph

- update_glyph_metrics

- add_component_to_glyph

- add_anchor_to_glyph

- set_kerning_pair

- get_selected_glyphs

- get_selected_font_and_master

- save_font

Hello everyone,

I wanted to share an update regarding the tools currently available:

- list_open_fonts

- get_font_glyphs

- get_font_masters

- get_font_instances

- get_glyph_details

- get_font_kerning

- create_glyph

- delete_glyph

- update_glyph_properties

- copy_glyph

- update_glyph_metrics

- add_component_to_glyph

- add_anchor_to_glyph

- set_kerning_pair

- get_selected_glyphs

- get_selected_font_and_master

- save_font

This week, I plan to expand the toolset by adding functions related to shape and glyph path manipulation.

I’d greatly appreciate any suggestions or feedback you might have. Feel free to share your thoughts here or contribute directly on our semi-public GitHub repository.

Looking forward to your ideas and input!

I added two new tools:

get_glyph_paths

Get the path data for a glyph in a simple JSON format suitable for LLM editing.

Args:

- font_index (int): Index of the font (0-based). Defaults to 0.
- glyph_name (str): Name of the glyph. Required.
- master_id (str): Master ID. Optional; if None, uses the currently selected master.

Returns:

- str: JSON-encoded path data containing:
  - paths (list): List of paths, each containing:
    - nodes (list): List of nodes with x, y, type, smooth properties
    - closed (bool): Whether the path is closed
  - width (int): Glyph width
  - leftSideBearing (int): Left side bearing
  - rightSideBearing (int): Right side bearing
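To make the return format concrete, here is a small sketch of a payload in the shape described above, plus the kind of edit an agent might make before writing it back. The coordinates are invented, the node type value `"line"` is an assumption about how types are encoded, and `shift_paths` is a hypothetical helper, not part of the server.

```python
import json

# Example payload in the documented shape; all values are made up.
sample = json.dumps({
    "paths": [
        {
            "nodes": [
                {"x": 100, "y": 0, "type": "line", "smooth": False},
                {"x": 400, "y": 0, "type": "line", "smooth": False},
                {"x": 250, "y": 700, "type": "line", "smooth": False},
            ],
            "closed": True,
        }
    ],
    "width": 500,
    "leftSideBearing": 100,
    "rightSideBearing": 100,
})


def shift_paths(paths_json, dx=0, dy=0):
    """Move every node by (dx, dy) and return the edited JSON string.

    Width and side bearings are left untouched here; presumably the
    application would recompute them after the paths are set.
    """
    data = json.loads(paths_json)
    for path in data["paths"]:
        for node in path["nodes"]:
            node["x"] += dx
            node["y"] += dy
    return json.dumps(data)


shifted = json.loads(shift_paths(sample, dx=10, dy=-5))
print(shifted["paths"][0]["nodes"][0])
```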

set_glyph_paths

Set the path data for a glyph from JSON, replacing existing paths.

Args:

- font_index (int): Index of the font (0-based). Defaults to 0.
- glyph_name (str): Name of the glyph. Required.
- master_id (str): Master ID. Optional; if None, uses the currently selected master.
- paths_data (str): JSON string containing path data in the format returned by get_glyph_paths. Required.

Returns:

- str: JSON-encoded result with success status.
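Since set_glyph_paths replaces the existing paths, it may be worth validating `paths_data` before touching the glyph. The sketch below is one possible check, not what the server currently does: `validate_paths_data` is a hypothetical helper, the set of allowed node types is an assumption, and the error messages and `pathCount` field are invented for illustration.

```python
import json

# Assumption: node types follow the usual line/curve/offcurve/qcurve naming.
ALLOWED_NODE_TYPES = {"line", "curve", "offcurve", "qcurve"}


def _fail(message):
    return json.dumps({"success": False, "error": message})


def validate_paths_data(paths_data):
    """Check a paths_data JSON string and return a JSON-encoded result."""
    try:
        data = json.loads(paths_data)
    except json.JSONDecodeError as exc:
        return _fail(f"invalid JSON: {exc.msg}")

    paths = data.get("paths") if isinstance(data, dict) else None
    if not isinstance(paths, list):
        return _fail("missing 'paths' list")

    for p_index, path in enumerate(paths):
        nodes = path.get("nodes") if isinstance(path, dict) else None
        if not isinstance(nodes, list):
            return _fail(f"path {p_index}: missing 'nodes' list")
        for n_index, node in enumerate(nodes):
            where = f"path {p_index}, node {n_index}"
            if not isinstance(node, dict):
                return _fail(f"{where}: node must be an object")
            if not all(isinstance(node.get(k), (int, float)) for k in ("x", "y")):
                return _fail(f"{where}: x and y must be numbers")
            if node.get("type") not in ALLOWED_NODE_TYPES:
                return _fail(f"{where}: unknown node type")

    return json.dumps({"success": True, "pathCount": len(paths)})
```

Rejecting malformed input up front means a bad reply from the model cannot wipe out a glyph's outlines.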

Exciting Update: Now Integrated with Streamable HTTP Protocol!

Hello,

We’re thrilled to announce a significant update to the project! It already works with the Continue plugin and Copilot in VS Code, and the server now also runs over the Streamable HTTP transport, in line with the MCP protocol revision dated 2025-03-26.
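For reference, with Streamable HTTP the client POSTs JSON-RPC messages to a single endpoint and declares that it accepts either a JSON response or an event stream. The sketch below only builds the first `initialize` request without sending it. The `/mcp` path is an assumption (check the README for the actual endpoint), the port is the one from the earlier startup log, and the client name is invented.

```python
import json
import urllib.request

# Assumptions: port taken from the earlier log output; the path is a guess.
MCP_URL = "http://127.0.0.1:9680/mcp"


def build_initialize_request(url=MCP_URL):
    """Build (but do not send) an MCP initialize request over HTTP."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The client must accept both plain JSON and an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


request = build_initialize_request()
# To send it, the server must be running:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```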

This enhancement ensures improved performance, better compatibility, and a more streamlined experience for all users.

We look forward to your feedback and are eager to hear your thoughts on this new update!

Best,

Thierry

One task I hope AI could soon handle is hinting. To me, this is drudgery. It could either be part of the application itself or an add-on.

Absolutely — I think we’re not too far from making this possible for hinting, kerning, and spacing. These are repetitive, rules-based tasks that AI can already assist with in early stages. It just needs the right integration into the workflow — whether as part of the app or a plugin.

Follow the link previously shared by @tribby

Petr van Blokland gave a good talk about “sketching” with software/AI at ATD3 (ATD3_2-08): “Combining ML & rule based digital assistants to create short design cycles for type design projects”

Thanks, Thierry! I would hope hinting would be the first choice; kerning is a distant second. Actually, kerning should be well learned manually by anyone in the field before the task is passed on to the AI bots. That way, they can see whether they agree with the bot’s decision-making and perhaps correct it.

Actually, Kerning should be well learned manually by anyone in the field before the task is passed on to the AI bots. That way, they can see if they agree with the bot’s decision making and perhaps correct it.

Totally agree.

That’s also why I’d love to keep control of the final touch. But if AI can handle a rough pass and follow some high-level instructions or style references, that would save time without compromising quality.

For hinting: what kind of inputs or control would be useful to you? I can start adding tools to the MCP server. Maybe we could define target sizes, direction preferences, or even glyph categories? Open to ideas.
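To give the discussion something concrete to react to, the inputs mentioned above could be collected into one parameter object. Everything here is hypothetical: no hinting tool exists yet, and the field names, defaults, and allowed values are only a starting point for discussion.

```python
from dataclasses import dataclass, field

VALID_DIRECTIONS = {"horizontal", "vertical", "both"}  # hypothetical options


@dataclass
class HintingRequest:
    """Possible inputs for a future hinting tool (all names are placeholders)."""

    target_sizes: list = field(default_factory=lambda: [12, 16, 24])  # in ppem
    direction: str = "both"
    glyph_categories: list = field(default_factory=lambda: ["Letter"])

    def validate(self):
        """Return a list of human-readable problems; empty means valid."""
        errors = []
        if not self.target_sizes:
            errors.append("target_sizes must not be empty")
        elif any(not isinstance(s, int) or s <= 0 for s in self.target_sizes):
            errors.append("target_sizes must be positive integers")
        if self.direction not in VALID_DIRECTIONS:
            options = ", ".join(sorted(VALID_DIRECTIONS))
            errors.append(f"direction must be one of: {options}")
        if not self.glyph_categories:
            errors.append("glyph_categories must not be empty")
        return errors
```

An agent would fill in such a request from a high-level instruction, and the server could refuse it with the returned messages before changing anything in the font.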